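To set up replication in MongoDB, we will run three mongod instances as members of one replica set named test. With three members, the set can still elect a primary when one of them goes down.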

C:\Program Files\MongoDB\Server\4.0\bin>mongod --smallfiles --oplogSize 50 --replSet test --port 27017 --dbpath C:\data\db

C:\Program Files\MongoDB\Server\4.0\bin>mongod --smallfiles --oplogSize 50 --replSet test --port 27018 --dbpath D:\data\db

C:\Program Files\MongoDB\Server\4.0\bin>mongod --smallfiles --oplogSize 50 --replSet test --port 27019 --dbpath E:\data\db

C:\>cd "\Program Files\MongoDB\Server\4.0\bin"

C:\Program Files\MongoDB\Server\4.0\bin>mongo

MongoDB shell version v4.0.2

connecting to: mongodb://127.0.0.1:27017

MongoDB server version: 4.0.2

Server has startup warnings:

2019-01-24T10:26:02.197+0530 I CONTROL [initandlisten]

2019-01-24T10:26:02.197+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the d

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** Read and write access to data and confi

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten]

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** Remote systems will be unable to connec

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** Start the server with --bind_ip <addres

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** addresses it should serve responses fro

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** bind to all interfaces. If this behavio

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disa

2019-01-24T10:26:02.198+0530 I CONTROL [initandlisten]

C:\Program Files\MongoDB\Server\4.0\bin>mongo --host 127.0.0.1:27018

MongoDB shell version v4.0.2

connecting to: mongodb://127.0.0.1:27018/

MongoDB server version: 4.0.2

Server has startup warnings:

2019-01-24T10:39:24.775+0530 I CONTROL [initandlisten]

2019-01-24T10:39:24.775+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-01-24T10:39:24.775+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-01-24T10:39:24.775+0530 I CONTROL [initandlisten]

2019-01-24T10:39:24.776+0530 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-01-24T10:39:24.776+0530 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2019-01-24T10:39:24.776+0530 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2019-01-24T10:39:24.777+0530 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2019-01-24T10:39:24.777+0530 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2019-01-24T10:39:24.777+0530 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-01-24T10:39:24.778+0530 I CONTROL [initandlisten]

MongoDB Enterprise test:SECONDARY>

C:\Program Files\MongoDB\Server\4.0\bin>mongo --host 127.0.0.1:27019

MongoDB shell version v4.0.2

connecting to: mongodb://127.0.0.1:27019/

MongoDB server version: 4.0.2

Server has startup warnings:

2019-01-30T12:06:55.398+0530 I CONTROL [initandlisten]

2019-01-30T12:06:55.398+0530 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-01-30T12:06:55.398+0530 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-01-30T12:06:55.399+0530 I CONTROL [initandlisten]

2019-01-30T12:06:55.399+0530 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.

2019-01-30T12:06:55.399+0530 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.

2019-01-30T12:06:55.400+0530 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP

2019-01-30T12:06:55.400+0530 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to

2019-01-30T12:06:55.400+0530 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the

2019-01-30T12:06:55.400+0530 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.

2019-01-30T12:06:55.401+0530 I CONTROL [initandlisten]

MongoDB Enterprise test:SECONDARY>

MongoDB Enterprise test:PRIMARY> rs.initiate();

{

"operationTime" : Timestamp(1548307074, 1),

"ok" : 0,

"errmsg" : "already initialized",

"code" : 23,

"codeName" : "AlreadyInitialized",

"$clusterTime" : {

"clusterTime" : Timestamp(1548307074, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise test:PRIMARY> rs.add(127.0.0.1:27018);

2019-01-24T10:48:31.528+0530 E QUERY [js] SyntaxError: missing ) after argument list @(shell):1:12

MongoDB Enterprise test:PRIMARY> rs.add("127.0.0.1:27018");

{

"ok" : 1,

"operationTime" : Timestamp(1548307139, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1548307139, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise test:PRIMARY> rs.add("127.0.0.1:27019");

MongoDB Enterprise test:PRIMARY> rs.conf();

{

"_id" : "test",

"version" : 2,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "localhost:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "127.0.0.1:27018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5c416b3d712e9a0ff1ac72b7")

}

}
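As an alternative to calling rs.add() once per member, a replica set that has not been initiated yet can be given its full member list in one configuration document. The following is a minimal sketch, assuming the set name and the three ports used in this walkthrough; `buildReplSetConfig` is a hypothetical helper, not part of the mongo shell, and the 127.0.0.1 host strings are an assumption.

```javascript
// Hypothetical helper: builds a replica set configuration document.
// The set name must match the --replSet value passed to each mongod.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName,
    members: hosts.map(function (host, index) {
      // Each member needs a unique integer _id and a "host:port" string.
      return { _id: index, host: host };
    })
  };
}

// Assumed hosts: the three instances started at the top of this walkthrough.
var config = buildReplSetConfig("test", [
  "127.0.0.1:27017",
  "127.0.0.1:27018",
  "127.0.0.1:27019"
]);

// In the mongo shell, on a set that has NOT been initiated yet:
//   rs.initiate(config);
```

On a set that is already initiated, as in the transcript above, rs.initiate() returns the "already initialized" error, so there rs.add() remains the way to add members.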

MongoDB Enterprise test:PRIMARY> rs.status();

{

"set" : "test",

"date" : ISODate("2019-01-24T05:20:36.793Z"),

"myState" : 1,

"term" : NumberLong(7),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"appliedOpTime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"durableOpTime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1548307194, 1),

"members" : [

{

"_id" : 0,

"name" : "localhost:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1475,

"optime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"optimeDate" : ISODate("2019-01-24T05:20:34Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1548305762, 1),

"electionDate" : ISODate("2019-01-24T04:56:02Z"),

"configVersion" : 2,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "127.0.0.1:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 97,

"optime" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"optimeDurable" : {

"ts" : Timestamp(1548307234, 1),

"t" : NumberLong(7)

},

"optimeDate" : ISODate("2019-01-24T05:20:34Z"),

"optimeDurableDate" : ISODate("2019-01-24T05:20:34Z"),

"lastHeartbeat" : ISODate("2019-01-24T05:20:35.574Z"),

"lastHeartbeatRecv" : ISODate("2019-01-24T05:20:36.616Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "localhost:27017",

"syncSourceHost" : "localhost:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 2

}

],

"ok" : 1,

"operationTime" : Timestamp(1548307234, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1548307234, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

MongoDB Enterprise test:PRIMARY>
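The rs.status() document is long, and usually only each member's name, state and health matter. The sketch below reduces it to one line per member. `summarizeMembers` is a hypothetical helper, and `sampleStatus` is a hand-trimmed stand-in for the output above so that the snippet also runs outside the shell.

```javascript
// Hypothetical helper: one summary line per replica set member.
function summarizeMembers(status) {
  return status.members.map(function (m) {
    // health is 1 when the member is reachable, 0 otherwise.
    var suffix = m.health === 1 ? "" : " (unreachable)";
    return m.name + " " + m.stateStr + suffix;
  });
}

// Trimmed sample with the same field names as the rs.status() output above.
var sampleStatus = {
  set: "test",
  members: [
    { _id: 0, name: "localhost:27017", health: 1, stateStr: "PRIMARY" },
    { _id: 1, name: "127.0.0.1:27018", health: 1, stateStr: "SECONDARY" }
  ]
};

var lines = summarizeMembers(sampleStatus);

// In the mongo shell, against the live set:
//   summarizeMembers(rs.status()).forEach(function (l) { print(l); });
```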

MongoDB Enterprise test:PRIMARY> use exampleDB

switched to db exampleDB

MongoDB Enterprise test:PRIMARY> for (var i = 0; i <= 10; i++) db.exampleCollection.insert( { x : i } )

WriteResult({ "nInserted" : 1 })

MongoDB Enterprise test:PRIMARY> db.exampleCollection.find().pretty();

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f5f"), "x" : 0 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f60"), "x" : 1 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f61"), "x" : 2 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f62"), "x" : 3 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f63"), "x" : 4 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f64"), "x" : 5 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f65"), "x" : 6 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f66"), "x" : 7 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f67"), "x" : 8 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f68"), "x" : 9 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f69"), "x" : 10 }

MongoDB Enterprise test:PRIMARY>

Now connect to a secondary and check that the data has been replicated. Reads on a secondary have to be enabled first with setSlaveOk().

MongoDB Enterprise test:SECONDARY> db.getMongo().setSlaveOk()

MongoDB Enterprise test:SECONDARY> db.exampleCollection.find().pretty();

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f5f"), "x" : 0 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f61"), "x" : 2 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f63"), "x" : 4 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f65"), "x" : 6 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f62"), "x" : 3 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f67"), "x" : 8 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f69"), "x" : 10 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f64"), "x" : 5 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f68"), "x" : 9 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f60"), "x" : 1 }

{ "_id" : ObjectId("5c494c1fa8a110e1bca27f66"), "x" : 7 }

MongoDB Enterprise test:SECONDARY>
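The secondary returns the same eleven documents, but in a different order, because a find() without a sort does not guarantee any order. To confirm that both members hold the same data, the values can be compared after sorting. This is a minimal sketch: `sortedValues` and `sameValues` are hypothetical helpers, and the two arrays reproduce the x values from the transcripts above.

```javascript
// Hypothetical helper: extracts one numeric field and sorts it ascending.
function sortedValues(docs, field) {
  return docs
    .map(function (d) { return d[field]; })
    .sort(function (a, b) { return a - b; });
}

// Hypothetical helper: true when both result sets hold the same values.
function sameValues(primaryDocs, secondaryDocs, field) {
  var a = sortedValues(primaryDocs, field);
  var b = sortedValues(secondaryDocs, field);
  if (a.length !== b.length) return false;
  for (var i = 0; i < a.length; i++) {
    if (a[i] !== b[i]) return false;
  }
  return true;
}

// x values in the order each member returned them in the transcripts.
var primaryDocs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10].map(function (x) { return { x: x }; });
var secondaryDocs = [0, 2, 4, 6, 3, 8, 10, 5, 9, 1, 7].map(function (x) { return { x: x }; });

var replicated = sameValues(primaryDocs, secondaryDocs, "x");

// In the mongo shell, each array would come from
//   db.exampleCollection.find().toArray()
// run on the respective member.
```

Alternatively, running db.exampleCollection.find().sort({ x : 1 }) on both members makes the two listings directly comparable by eye.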

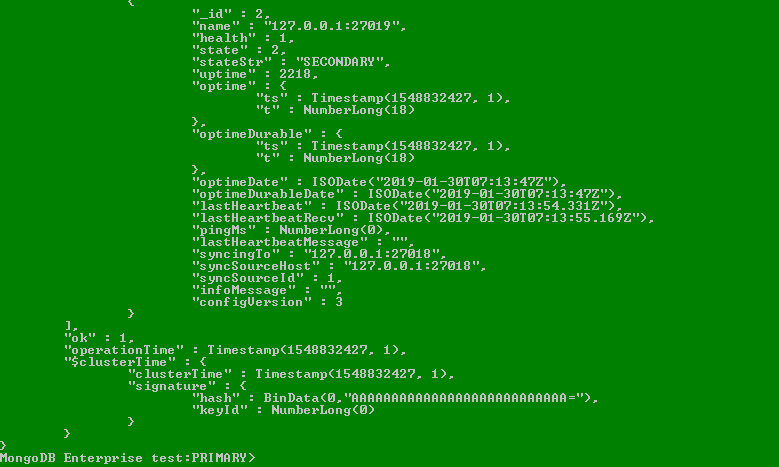

Check on the other secondary.

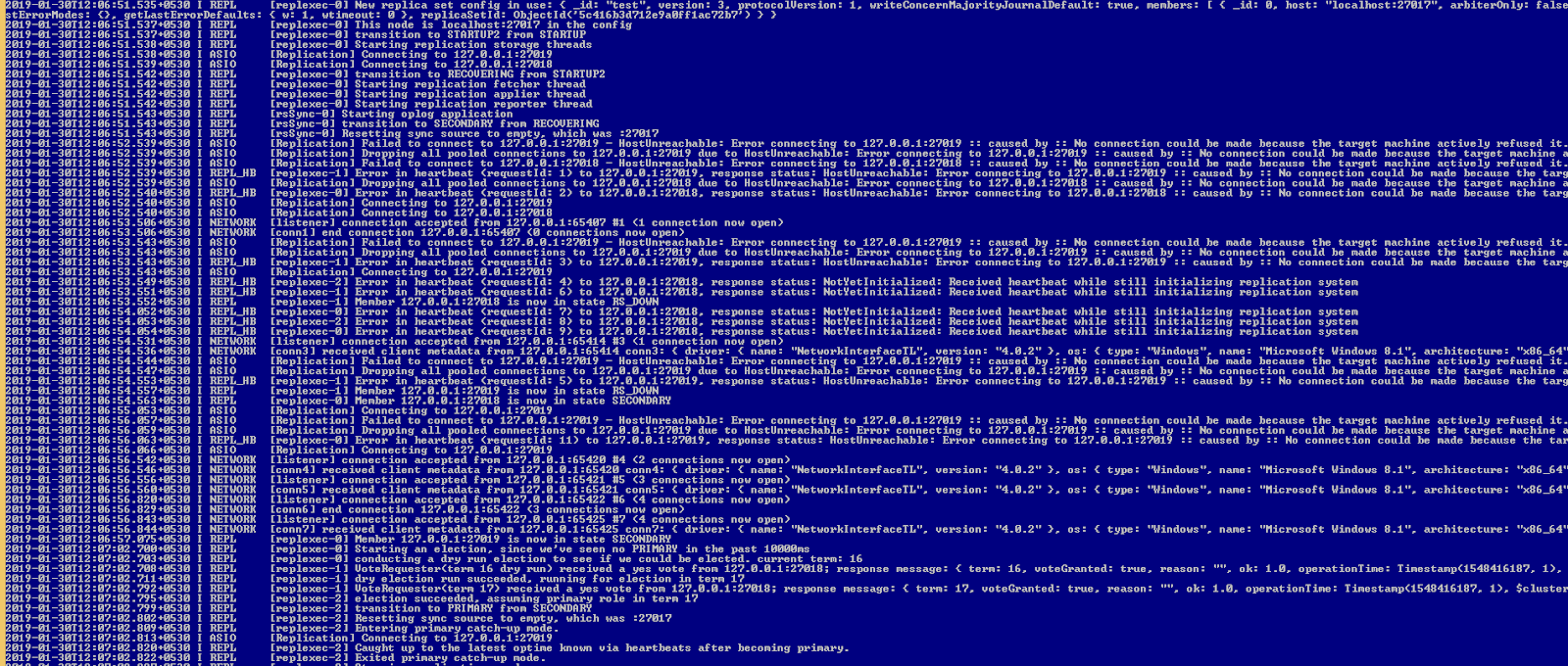

To test failover, let us kill the primary now and then check rs.status() on one of the secondaries.

It shows that the earlier primary is no longer available and that the instance running on port 27018 has become the primary.
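The failover check can be expressed as a comparison of two rs.status() documents, one captured before the primary was killed and one after. The sketch below is an illustration only: `findPrimary` and `describeFailover` are hypothetical helpers, and both sample documents are hand-written stand-ins rather than captured output.

```javascript
// Hypothetical helper: name of the member currently in PRIMARY state, or null.
function findPrimary(status) {
  for (var i = 0; i < status.members.length; i++) {
    if (status.members[i].stateStr === "PRIMARY") {
      return status.members[i].name;
    }
  }
  return null;
}

// Hypothetical helper: reports whether the primary role moved between snapshots.
function describeFailover(before, after) {
  var oldPrimary = findPrimary(before);
  var newPrimary = findPrimary(after);
  return {
    oldPrimary: oldPrimary,
    newPrimary: newPrimary,
    failedOver: oldPrimary !== null && newPrimary !== null && oldPrimary !== newPrimary
  };
}

// Hand-written samples (assumed shape, not real output).
var before = { members: [
  { name: "localhost:27017", health: 1, stateStr: "PRIMARY" },
  { name: "127.0.0.1:27018", health: 1, stateStr: "SECONDARY" },
  { name: "127.0.0.1:27019", health: 1, stateStr: "SECONDARY" }
] };
var after = { members: [
  { name: "localhost:27017", health: 0, stateStr: "(not reachable/healthy)" },
  { name: "127.0.0.1:27018", health: 1, stateStr: "PRIMARY" },
  { name: "127.0.0.1:27019", health: 1, stateStr: "SECONDARY" }
] };

var result = describeFailover(before, after);

// In the mongo shell: var before = rs.status(); kill the primary, reconnect
// to a surviving member, then printjson(describeFailover(before, rs.status()));
```

The samples use three members because a new primary can only be elected by a majority of the voting members, which a single survivor of a two-member set does not have.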

Let us start the instance on port 27017 again.